Info-gap decision theory

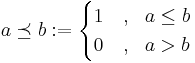

Info-gap decision theory is a non-probabilistic decision theory that seeks to optimize robustness to failure – or opportuneness for windfall – under severe uncertainty,[1][2] in particular applying sensitivity analysis of the stability radius type[3] to perturbations in the value of a given estimate of the parameter of interest. It has some connections with Wald's maximin model; some authors distinguish them, others consider them instances of the same principle.

It has been developed since the 1980s by Yakov Ben-Haim,[4] has found many applications, and has been described as a theory for decision-making under "severe uncertainty". It has been criticized as unsuited for this purpose, and alternatives have been proposed, including such classical approaches as robust optimization.

Summary

Info-gap is a decision theory: it seeks to assist in decision-making under uncertainty. It does this by using three models, each of which builds on the last. One begins with a model for the situation, where some parameter or parameters are unknown. One then takes an estimate for the parameter, which is assumed to be substantially wrong, and one analyzes how sensitive the outcomes under the model are to the error in this estimate.

- Uncertainty model

- Starting from the estimate, an uncertainty model measures how distant other values of the parameter are from the estimate: as uncertainty increases, the set of possible values increases – if one is this uncertain in the estimate, what other parameters are possible?

- Robustness/opportuneness model

- Given an uncertainty model and a minimum level of desired outcome, then for each decision, how uncertain can you be and still be assured of achieving this minimum level? (This is called the robustness of the decision.) Conversely, given a desired windfall outcome, how uncertain must you be for this desirable outcome to be possible? (This is called the opportuneness of the decision.)

- Decision-making model

- To decide, one optimizes either the robustness or the opportuneness, on the basis of the robustness or opportuneness model. Given a desired minimum outcome, which decision is most robust (can stand the most uncertainty) and still give the desired outcome (the robust-satisficing action)? Alternatively, given a desired windfall outcome, which decision requires the least uncertainty for the outcome to be achievable (the opportune-windfalling action)?

Models

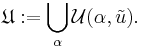

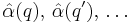

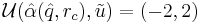

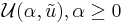

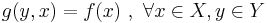

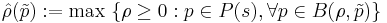

Info-gap theory models uncertainty $\alpha$ (the horizon of uncertainty) as nested subsets $\mathcal{U}(\alpha, \tilde{u})$ around a point estimate $\tilde{u}$ of a parameter: with no uncertainty, the estimate is correct, and as uncertainty increases, the subset grows, in general without bound. The subsets quantify uncertainty – the horizon of uncertainty measures the "distance" between an estimate and a possibility – providing an intermediate measure between a single point (the point estimate) and the universe of all possibilities, and giving a measure for sensitivity analysis: how uncertain can an estimate be and a decision (based on this incorrect estimate) still yield an acceptable outcome – what is the margin of error?

Info-gap is a local decision theory, beginning with an estimate and considering deviations from it; this contrasts with global methods such as minimax, which considers worst-case analysis over the entire space of outcomes, and probabilistic decision theory, which considers all possible outcomes, and assigns some probability to them. In info-gap, the universe of possible outcomes under consideration is the union of all of the nested subsets:

$$\mathfrak{U}(\tilde{u}) = \bigcup_{\alpha \geq 0} \mathcal{U}(\alpha, \tilde{u})$$

Info-gap analysis gives answers to such questions as:

- under what level of uncertainty can specific requirements be reliably assured (robustness), and

- what level of uncertainty is necessary to achieve certain windfalls (opportuneness).

It can be used for satisficing, as an alternative to optimizing in the presence of uncertainty or bounded rationality; see robust optimization for an alternative approach.

Comparison with classical decision theory

In contrast to probabilistic decision theory, info-gap analysis does not use probability distributions: it measures the deviation of errors (differences between the parameter and the estimate), but not the probability of outcomes – in particular, the estimate $\tilde{u}$ is in no sense more or less likely than other points, as info-gap does not use probability. Info-gap, by not using probability distributions, is robust in that it is not sensitive to assumptions on probabilities of outcomes. However, the model of uncertainty does include a notion of "closer" and "more distant" outcomes, and thus includes some assumptions, and is not as robust as simply considering all possible outcomes, as in minimax. Further, it considers a fixed universe $\mathfrak{U}(\tilde{u})$ (the union of the nested subsets), so it is not robust to unexpected (not modeled) events.

The connection to minimax analysis has occasioned some controversy: (Ben-Haim 1999, pp. 271–2) argues that info-gap's robustness analysis, while similar in some ways, is not minimax worst-case analysis, as it does not evaluate decisions over all possible outcomes, while (Sniedovich, 2007) argues that the robustness analysis can be seen as an example of maximin (not minimax), applied to maximizing the horizon of uncertainty. This is discussed in criticism, below, and elaborated in the classical decision theory perspective.

Basic example: budget

As a simple example, consider a worker with uncertain income. They expect to make $100 per week, while if they make under $60 they will be unable to afford lodging and will sleep in the street, and if they make over $150 they will be able to afford a night's entertainment.

Using the info-gap absolute error model:

$$\mathcal{U}(\alpha, \tilde{u}) = \{u : |u - \tilde{u}| \leq \alpha\}, \quad \alpha \geq 0,$$

where $\tilde{u} = 100$ is the estimated weekly income, one would conclude that the worker's robustness function $\hat{\alpha}$ is $40, and their opportuneness function $\hat{\beta}$ is $50: if they are certain that they will make $100, they will neither sleep in the street nor feast, and likewise if they make within $40 of $100. However, if they erred in their estimate by more than $40, they may find themselves on the street, while if they erred by more than $50, they may find themselves in clover.

As stated, this example is only descriptive, and does not enable any decision making – in applications, one considers alternative decision rules, and often situations with more complex uncertainty.

Consider now the worker thinking of moving to a different town, where the work pays less but lodgings are cheaper. Say that here they estimate that they will earn $80 per week, but lodging only costs $44, while entertainment still costs $150. In that case the robustness function will be $36, while the opportuneness function will be $70. If they make the same errors in both cases, the second case (moving) is both less robust and less opportune.

On the other hand, if one measures uncertainty by relative error, using the fractional error model:

$$\mathcal{U}(\alpha, \tilde{u}) = \{u : |u - \tilde{u}| \leq \alpha \, |\tilde{u}|\}, \quad \alpha \geq 0,$$

in the first case robustness is 40% and opportuneness is 50%, while in the second case robustness is 45% and opportuneness is 87.5%, so moving is more robust and less opportune.

This example demonstrates the sensitivity of analysis to the model of uncertainty.
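The arithmetic behind both comparisons can be reproduced with a short sketch. The function names and the `scale` argument are illustrative choices, not part of info-gap theory: `scale=1.0` corresponds to the absolute error model and `scale=estimate` to the fractional error model.

```python
def robustness(estimate, critical, scale=1.0):
    """Largest horizon alpha such that every income within alpha*scale
    of the estimate is still at least `critical`."""
    return max(0.0, (estimate - critical) / scale)


def opportuneness(estimate, windfall, scale=1.0):
    """Smallest horizon alpha such that some income within alpha*scale
    of the estimate reaches `windfall`."""
    return max(0.0, (windfall - estimate) / scale)


# (estimated income, lodging cost, entertainment threshold) from the example
options = {"stay": (100.0, 60.0, 150.0), "move": (80.0, 44.0, 150.0)}

results = {}
for name, (estimate, lodging, entertainment) in options.items():
    results[name] = {
        # absolute error model: the horizon is measured in dollars
        "absolute": (robustness(estimate, lodging),
                     opportuneness(estimate, entertainment)),
        # fractional error model: the horizon is a fraction of the estimate
        "fractional": (robustness(estimate, lodging, scale=estimate),
                       opportuneness(estimate, entertainment, scale=estimate)),
    }

for name, values in results.items():
    print(name, values)
```

Under the absolute model staying has the larger robustness (40 against 36), while under the fractional model moving does (0.45 against 0.40), which is the reversal described in the text.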

Info-gap models

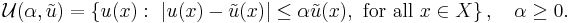

Info-gap can be applied to spaces of functions; in that case the uncertain parameter is a function $u(x)$ with estimate $\tilde{u}(x)$ and the nested subsets are sets of functions. One way to describe such a set of functions is by requiring values of u to be close to values of $\tilde{u}$ for all x, using a family of info-gap models on the values.

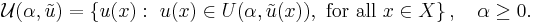

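For example, the above fractional error model for values becomes the fractional error model for functions by adding a parameter x to the definition:

$$\mathcal{U}(\alpha, \tilde{u}) = \{u(x) : |u(x) - \tilde{u}(x)| \leq \alpha \, |\tilde{u}(x)| \text{ for all } x\}, \quad \alpha \geq 0.$$

More generally, if $\mathcal{U}(\alpha, \tilde{u}(x))$ is a family of info-gap models of values, then one obtains an info-gap model of functions in the same way:

$$\mathcal{U}(\alpha, \tilde{u}) = \{u(x) : u(x) \in \mathcal{U}(\alpha, \tilde{u}(x)) \text{ for all } x\}, \quad \alpha \geq 0.$$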
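As a rough illustration of this construction, the sketch below lifts a model on values to a model on functions by testing membership pointwise. It assumes that values are real numbers, that each value model returns an interval, and that checking a finite grid of x values is an acceptable stand-in for "for all x". All names are hypothetical.

```python
def absolute_error(alpha, estimate):
    """Value model: all u with |u - estimate| <= alpha, as an interval."""
    return (estimate - alpha, estimate + alpha)


def fractional_error(alpha, estimate):
    """Value model: all u with |u - estimate| <= alpha * |estimate|."""
    half_width = alpha * abs(estimate)
    return (estimate - half_width, estimate + half_width)


def in_function_model(u, u_est, alpha, xs, value_model):
    """u is in the function model at horizon alpha if, at every sampled x,
    u(x) lies in the value model centred on u_est(x)."""
    for x in xs:
        lo, hi = value_model(alpha, u_est(x))
        if not lo <= u(x) <= hi:
            return False
    return True


def u_est(x):
    return 10.0 + 2.0 * x       # hypothetical estimated function


def u_shifted(x):
    return u_est(x) + 3.0       # hypothetical candidate for the true function


xs = [0.5 * i for i in range(11)]   # grid on [0, 5]

print(in_function_model(u_shifted, u_est, 3.5, xs, absolute_error))
print(in_function_model(u_shifted, u_est, 0.35, xs, fractional_error))
print(in_function_model(u_shifted, u_est, 0.20, xs, fractional_error))
```

The candidate lies inside the absolute-error envelope at horizon 3.5 and inside the fractional-error envelope at 0.35, but not at 0.20, because at x = 0 its deviation of 3 is 30% of the estimate.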

Motivation

It is common to make decisions under uncertainty.[note 1] What can be done to make good (or at least the best possible) decisions under conditions of uncertainty? Info-gap robustness analysis evaluates each feasible decision by asking: how much deviation from an estimate of a parameter value, function, or set is permitted while still "guaranteeing" acceptable performance? In everyday terms, the "robustness" of a decision is set by the size of deviation from an estimate that still leads to performance within requirements when using that decision. It is sometimes difficult to judge how much robustness is needed or sufficient. However, according to info-gap theory, the ranking of feasible decisions in terms of their degree of robustness is independent of such judgments.

Info-gap theory also proposes an opportuneness function which evaluates the potential for windfall outcomes resulting from favorable uncertainty.

Example: resource allocation

Here is an illustrative example, which will introduce the basic concepts of information gap theory. More rigorous description and discussion follows.

Resource allocation

Suppose you are a project manager, supervising two teams: red team and blue team. Each of the teams will yield some revenue at the end of the year. This revenue depends on the investment in the team – higher investments will yield higher revenues. You have a limited amount of resources, and you wish to decide how to allocate these resources between the two groups, so that the total revenues of the project will be as high as possible.

If you have an estimate of the correlation between the investment in the teams and their revenues, as illustrated in Figure 1, you can also estimate the total revenue as a function of the allocation. This is exemplified in Figure 2 – the left-hand side of the graph corresponds to allocating all resources to the red team, while the right-hand side of the graph corresponds to allocating all resources to the blue team. A simple optimization will reveal the optimal allocation – the allocation that, under your estimate of the revenue functions, will yield the highest revenue.

Introducing uncertainty

However, this analysis does not take uncertainty into account. Since the revenue functions are only a (possibly rough) estimate, the actual revenue functions may be quite different. For any level of uncertainty (or horizon of uncertainty) we can define an envelope within which we assume the actual revenue functions are. Higher uncertainty would correspond to a more inclusive envelope. Two of these uncertainty envelopes, surrounding the revenue function of the red team, are represented in Figure 3. As illustrated in Figure 4, the actual revenue function may be any function within a given uncertainty envelope. Of course, some instances of the revenue functions are only possible when the uncertainty is high, while small deviations from the estimate are possible even when the uncertainty is small.

These envelopes are called info-gap models of uncertainty, since they describe one's understanding of the uncertainty surrounding the revenue functions.

From the info-gap models (or uncertainty envelopes) of the revenue functions, we can determine an info-gap model for the total amount of revenues. Figure 5 illustrates two of the uncertainty envelopes defined by the info-gap model of the total amount of revenues.

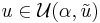

Robustness

Now, assume that as a project manager, high revenues will earn you the senior management's respect, but if the total revenues are below some threshold, it will cost you your job. We will define such a threshold as a critical revenue, since total revenues beneath the critical revenue will be considered as failure.

For any given allocation, the robustness of the allocation, with respect to the critical revenue, is the maximal uncertainty that will still guarantee that the total revenue will exceed the critical revenue. This is demonstrated in Figure 6. If the uncertainty increases, the envelope of uncertainty becomes more inclusive, and comes to include instances of the total revenue function that, for the specific allocation, yield a revenue smaller than the critical revenue.

The robustness measures the immunity of a decision to failure. A robust satisficer is a decision maker that prefers choices with higher robustness.

If, for some allocation $q$, we plot the robustness against the critical revenue, we obtain a graph somewhat similar to Figure 7. This graph, called the robustness curve of allocation $q$, has two important features that are common to (most) robustness curves:

- The curve is non-increasing. This captures the notion that when we have higher requirements (higher critical revenue), we are less immune to failure (lower robustness). This is the tradeoff between quality and robustness.

- At the nominal revenue, that is, when the critical revenue equals the revenue under the nominal model (our estimate of the revenue functions), the robustness is zero. This is since a slight deviation from the estimate may decrease the total revenue.

If we compare the robustness curves of two allocations, $q$ and $q'$, it is not uncommon that the two curves will intersect, as illustrated in Figure 8. In this case, neither allocation is strictly more robust than the other: for critical revenues smaller than the crossing point, one allocation is more robust, while the other is more robust for critical revenues higher than the crossing point. That is, the preference between the two allocations depends on the criterion of failure – the critical revenue.
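Crossing robustness curves can be reproduced with a small numerical sketch. Everything specific here is an assumption made for illustration: linear estimated revenue functions, a fractional-error envelope in which the red team's revenue is four times as uncertain as the blue team's, and allocations expressed as the fraction `q` of resources given to the red team.

```python
def red_estimate(x):
    return 120.0 * x            # hypothetical estimated revenue, red team


def blue_estimate(x):
    return 80.0 * x             # hypothetical estimated revenue, blue team


W_RED, W_BLUE = 1.0, 0.25       # hypothetical relative uncertainty weights


def nominal_revenue(q):
    """Estimated total revenue when a fraction q goes to the red team."""
    return red_estimate(q) + blue_estimate(1.0 - q)


def spread(q):
    """Revenue lost per unit of horizon of uncertainty in the worst case."""
    return W_RED * red_estimate(q) + W_BLUE * blue_estimate(1.0 - q)


def robustness(q, critical):
    """Largest alpha with nominal_revenue(q) - alpha * spread(q) >= critical."""
    return max(0.0, (nominal_revenue(q) - critical) / spread(q))


criticals = [40.0, 60.0, 72.0, 80.0, 100.0]
for q in (0.8, 0.2):
    curve = [round(robustness(q, r), 3) for r in criticals]
    print(f"q={q}: nominal={nominal_revenue(q):.1f}, robustness curve={curve}")
```

With these numbers each curve is non-increasing and reaches zero at its own nominal revenue, and the two curves cross at a critical revenue of about 72: below it the allocation favouring the blue team is more robust, above it the allocation favouring the red team is.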

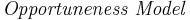

Opportuneness

Suppose, in addition to the threat of losing your job, the senior management offers you a carrot: if the revenues are higher than some revenue, you will be awarded a considerable bonus. Although revenues lower than this revenue will not be considered to be a failure (as you may still keep your job), a higher revenue will be considered a windfall success. We will therefore denote this threshold by windfall revenue.

For any given allocation, the opportuneness of the allocation, with respect to the windfall revenue, is the minimal uncertainty for which it is possible for the total revenue to exceed the windfall revenue. This is demonstrated in Figure 9. If the uncertainty decreases, the envelope of uncertainty becomes less inclusive, excluding all instances of the total revenue function that, for the specific allocation, yield a revenue higher than the windfall revenue.

The opportuneness may be considered as the immunity to windfall success. Therefore, lower opportuneness is preferred to higher opportuneness.

If, for some allocation $q$, we plot the opportuneness against the windfall revenue, we obtain a graph somewhat similar to Figure 10. This graph, called the opportuneness curve of allocation $q$, has two important features that are common to (most) opportuneness curves:

- The curve is non-decreasing. This captures the notion that when we have higher requirements (higher windfall revenue), we are more immune to windfall (higher opportuneness, which is less desirable). That is, we need a more substantial deviation from the estimate in order to achieve our ambitious goal. This is the tradeoff between quality and opportuneness.

- At the nominal revenue, that is, when the windfall revenue equals the revenue under the nominal model (our estimate of the revenue functions), the opportuneness is zero. This is because no deviation from the estimate is needed in order to achieve the windfall revenue.
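Both features of the opportuneness curve can be checked on a toy model. The sketch below assumes linear estimated revenues and a fractional-error envelope with made-up uncertainty weights; `q` is the fraction of resources given to the red team. None of these choices comes from the article.

```python
def nominal_revenue(q):
    """Hypothetical estimated total revenue: 120 per unit invested in the
    red team and 80 per unit invested in the blue team."""
    return 120.0 * q + 80.0 * (1.0 - q)


def spread(q):
    """Revenue gained per unit of horizon of uncertainty in the best case,
    with hypothetical weights 1.0 (red) and 0.25 (blue)."""
    return 1.0 * 120.0 * q + 0.25 * 80.0 * (1.0 - q)


def opportuneness(q, windfall):
    """Smallest alpha with nominal_revenue(q) + alpha * spread(q) >= windfall."""
    return max(0.0, (windfall - nominal_revenue(q)) / spread(q))


windfalls = [100.0, 112.0, 130.0, 150.0, 200.0]
q = 0.8
curve = [round(opportuneness(q, w), 3) for w in windfalls]
print(f"q={q}: nominal={nominal_revenue(q):.1f}, opportuneness curve={curve}")
```

The curve is zero for windfall revenues up to the nominal revenue (about 112 for this allocation) and rises from there, so more ambitious windfall targets require a larger horizon of uncertainty.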

Treatment of severe uncertainty

The logic underlying the above illustration is that the (unknown) true revenue is somewhere in the immediate neighborhood of the (known) estimate of the revenue. For if this is not the case, what is the point of conducting the analysis exclusively in this neighborhood?

Therefore, to remind ourselves that info-gap's manifest objective is to seek robust solutions for problems that are subject to severe uncertainty, it is instructive to exhibit in the display of the results also those associated with the true value of the revenue. Of course, given the severity of the uncertainty we do not know the true value.

What we do know, however, is that according to our working assumptions the estimate we have is a poor indication of the true value of the revenue and is likely to be substantially wrong. So, methodologically speaking, we have to display the true value at a distance from its estimate. In fact, it would be even more enlightening to display a number of possible true values.

In short, methodologically speaking the picture is this:

Note that in addition to the results generated by the estimate, two "possible" true values of the revenue are also displayed at a distance from the estimate.

As indicated by the picture, since the info-gap robustness model applies its Maximin analysis in an immediate neighborhood of the estimate, there is no assurance that the analysis is in fact conducted in the neighborhood of the true value of the revenue. In fact, under conditions of severe uncertainty this is, methodologically speaking, very unlikely.

This raises the question: how valid/useful/meaningful are the results? Aren't we sweeping the severity of the uncertainty under the carpet?

For example, suppose that a given allocation is found to be very fragile in the neighborhood of the estimate. Does this mean that this allocation is also fragile elsewhere in the region of uncertainty? Conversely, what guarantee is there that an allocation that is robust in the neighborhood of the estimate is also robust elsewhere in the region of uncertainty, indeed in the neighborhood of the true value of the revenue?

More fundamentally, given that the results generated by info-gap are based on a local revenue/allocation analysis in the neighborhood of an estimate that is likely to be substantially wrong, we have no other choice—methodologically speaking—but to assume that the results generated by this analysis are equally likely to be substantially wrong. In other words, in accordance with the universal Garbage In - Garbage Out Axiom, we have to assume that the quality of the results generated by info-gap's analysis is only as good as the quality of the estimate on which the results are based.

The picture speaks for itself.

What emerges then is that info-gap theory is yet to explain in what way, if any, it actually attempts to deal with the severity of the uncertainty under consideration. Subsequent sections of this article will address this severity issue and its methodological and practical implications.

A more detailed analysis of an illustrative numerical investment problem of this type can be found in Sniedovich (2007).

Uncertainty models

Info-gaps are quantified by info-gap models of uncertainty. An info-gap model is an unbounded family of nested sets. A frequently encountered example is a family of nested ellipsoids all having the same shape. The structure of the sets in an info-gap model derives from the information about the uncertainty. In general terms, the structure of an info-gap model of uncertainty is chosen to define the smallest or strictest family of sets whose elements are consistent with the prior information. Since there is, usually, no known worst case, the family of sets may be unbounded.

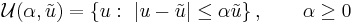

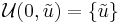

A common example of an info-gap model is the fractional error model. The best estimate of an uncertain function $u(x)$ is $\tilde{u}(x)$, but the fractional error of this estimate is unknown. The following unbounded family of nested sets of functions is a fractional-error info-gap model:

$$\mathcal{U}(\alpha, \tilde{u}) = \{u(x) : |u(x) - \tilde{u}(x)| \leq \alpha \, |\tilde{u}(x)| \text{ for all } x\}, \quad \alpha \geq 0$$

At any horizon of uncertainty $\alpha$, the set $\mathcal{U}(\alpha, \tilde{u})$ contains all functions $u(x)$ whose fractional deviation from $\tilde{u}(x)$ is no greater than $\alpha$. However, the horizon of uncertainty is unknown, so the info-gap model is an unbounded family of sets, and there is no worst case or greatest deviation.

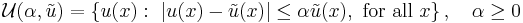

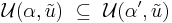

There are many other types of info-gap models of uncertainty. All info-gap models obey two basic axioms:

- Nesting. The info-gap model $\mathcal{U}(\alpha, \tilde{u})$ is nested if $\alpha < \alpha'$ implies that:

$$\mathcal{U}(\alpha, \tilde{u}) \subseteq \mathcal{U}(\alpha', \tilde{u})$$

- Contraction. The info-gap model $\mathcal{U}(0, \tilde{u})$ is a singleton set containing its center point:

$$\mathcal{U}(0, \tilde{u}) = \{\tilde{u}\}$$

The nesting axiom imposes the property of "clustering" which is characteristic of info-gap uncertainty. Furthermore, the nesting axiom implies that the uncertainty sets $\mathcal{U}(\alpha, \tilde{u})$ become more inclusive as $\alpha$ grows, thus endowing $\alpha$ with its meaning as a horizon of uncertainty. The contraction axiom implies that, at horizon of uncertainty zero, the estimate $\tilde{u}$ is correct.
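For interval-valued models on the real line, the two axioms can be spot-checked numerically. The sketch below is only a sanity check on a finite list of horizons, not a proof; the helper names and the deliberately non-nested `drifting_interval` counter-example are hypothetical.

```python
def fractional_interval(alpha, estimate):
    """Fractional error model: all u with |u - estimate| <= alpha * |estimate|."""
    half_width = alpha * abs(estimate)
    return (estimate - half_width, estimate + half_width)


def drifting_interval(alpha, estimate):
    """Counter-example: the interval moves away from the estimate as alpha grows."""
    return (estimate + alpha, estimate + 2.0 * alpha)


def is_nested(model, estimate, alphas):
    """Check U(a) is contained in U(a') for consecutive sampled horizons a < a'."""
    ordered = sorted(alphas)
    for small, large in zip(ordered, ordered[1:]):
        lo_s, hi_s = model(small, estimate)
        lo_l, hi_l = model(large, estimate)
        if not (lo_l <= lo_s and hi_s <= hi_l):
            return False
    return True


def contracts(model, estimate):
    """Check U(0) is the single point {estimate}."""
    return model(0.0, estimate) == (estimate, estimate)


alphas = [0.0, 0.5, 1.0, 2.0]
for model in (fractional_interval, drifting_interval):
    print(model.__name__, is_nested(model, 100.0, alphas), contracts(model, 100.0))
```

The drifting family satisfies contraction but not nesting, so it would not qualify as an info-gap model even though it shrinks to the estimate at horizon zero.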

Recall that the uncertain element $u$ may be a parameter, vector, function or set. The info-gap model is then an unbounded family of nested sets of parameters, vectors, functions or sets.

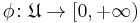

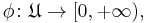

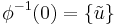

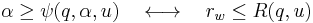

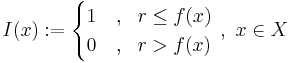

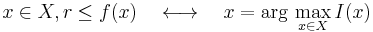

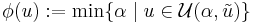

Sublevel sets

For a fixed point estimate $\tilde{u}$, an info-gap model is often equivalent to a function $\phi : \mathfrak{U} \to [0, +\infty)$ on the region of uncertainty $\mathfrak{U}$, defined as:

$$\phi(u) := \min \{\alpha : u \in \mathcal{U}(\alpha, \tilde{u})\}$$

meaning "the uncertainty of a point u is the minimum uncertainty such that u is in the set with that uncertainty". In this case, the family of sets $\mathcal{U}(\alpha, \tilde{u})$ can be recovered as the sublevel sets of $\phi$:

$$\mathcal{U}(\alpha, \tilde{u}) = \phi^{-1}([0, \alpha]) = \{u : \phi(u) \leq \alpha\}$$

meaning: "the nested subset with horizon of uncertainty $\alpha$ consists of all points with uncertainty less than or equal to $\alpha$".

Conversely, given a function $\phi : \mathfrak{U} \to [0, +\infty)$ satisfying the axiom $\phi^{-1}(0) = \{\tilde{u}\}$ (equivalently, $\phi(u) = 0$ if and only if $u = \tilde{u}$), it defines an info-gap model via the sublevel sets.

For instance, if the region of uncertainty is a metric space, then the uncertainty function can simply be the distance, $\phi(u) := d(\tilde{u}, u)$, so the nested subsets are simply

$$\mathcal{U}(\alpha, \tilde{u}) = \{u : d(\tilde{u}, u) \leq \alpha\}.$$

This always defines an info-gap model, as distances are always non-negative (axiom of non-negativity), and satisfies $\phi^{-1}(0) = \{\tilde{u}\}$ (info-gap axiom of contraction) because the distance between two points is zero if and only if they are equal (the identity of indiscernibles); nesting follows by construction of the sublevel sets.

Not all info-gap models arise as sublevel sets: for instance, if $u_1 \in \mathcal{U}(\alpha, \tilde{u})$ for all $\alpha > 1$ but not for $\alpha = 1$ (it has uncertainty "just more" than 1), then the minimum above is not defined; one can replace it by an infimum, but then the resulting sublevel sets will not agree with the info-gap model: $u_1 \in \phi^{-1}([0,1])$, but $u_1 \notin \mathcal{U}(1, \tilde{u})$. The effect of this distinction is very minor, however, as it modifies sets by less than changing the horizon of uncertainty by any positive number $\epsilon > 0$, however small.
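The metric-space case can be sketched in a few lines. The example assumes the region of uncertainty is the plane with Euclidean distance; the names `phi` and `in_uncertainty_set` are illustrative only.

```python
import math


def phi(u, estimate):
    """Uncertainty of point u: its Euclidean distance from the estimate."""
    return math.dist(u, estimate)


def in_uncertainty_set(u, estimate, alpha):
    """Membership in U(alpha, estimate), recovered as a sublevel set of phi."""
    return phi(u, estimate) <= alpha


estimate = (3.0, 4.0)
points = [(3.0, 4.0), (3.0, 5.0), (0.0, 0.0)]

for alpha in (0.0, 1.0, 5.0):
    members = [p for p in points if in_uncertainty_set(p, estimate, alpha)]
    print(alpha, members)
```

At horizon 0 only the estimate itself is a member (contraction), and each larger horizon keeps all earlier members while admitting more distant points (nesting).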

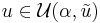

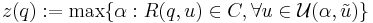

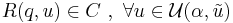

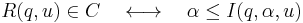

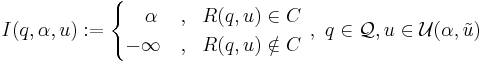

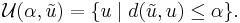

Robustness and opportuneness

Uncertainty may be either pernicious or propitious. That is, uncertain variations may be either adverse or favorable. Adversity entails the possibility of failure, while favorability is the opportunity for sweeping success. Info-gap decision theory is based on quantifying these two aspects of uncertainty, and choosing an action which addresses one or the other or both of them simultaneously. The pernicious and propitious aspects of uncertainty are quantified by two "immunity functions": the robustness function expresses the immunity to failure, while the opportuneness function expresses the immunity to windfall gain.

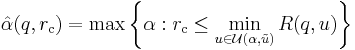

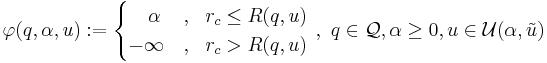

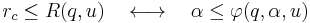

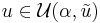

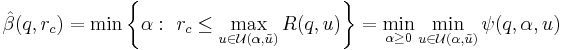

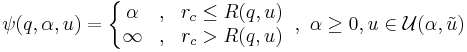

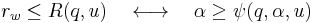

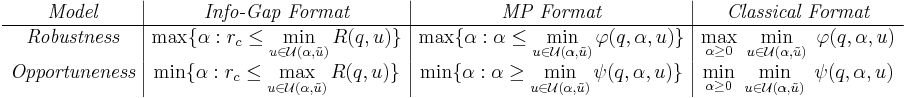

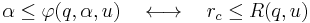

Robustness and opportuneness functions

The robustness function expresses the greatest level of uncertainty at which failure cannot occur; the opportuneness function is the least level of uncertainty which entails the possibility of sweeping success. The robustness and opportuneness functions address, respectively, the pernicious and propitious facets of uncertainty.

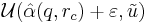

Let $q$ be a decision vector of parameters such as design variables, time of initiation, model parameters or operational options. We can verbally express the robustness and opportuneness functions as the maximum or minimum of a set of values of the uncertainty parameter $\alpha$ of an info-gap model:

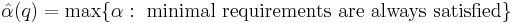

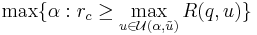

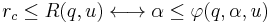

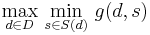

-

(robustness) (1a)

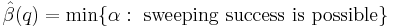

(opportuneness) (2a)

Formally,

-

(robustness) (1b)

(opportuneness) (2b)
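The two definitions can be approximated numerically when no closed form is available. The sketch below is one possible approach rather than a prescribed algorithm: it assumes a scalar uncertain parameter, an interval-valued info-gap model, a requirement of the form "reward at least some threshold", sampling of each interval on a grid, and bisection on $\alpha$, which relies on the nesting axiom. The reward function and all names are hypothetical.

```python
def absolute_error(alpha, estimate):
    """Interval info-gap model: all u with |u - estimate| <= alpha."""
    return (estimate - alpha, estimate + alpha)


def sample(interval, n=201):
    """Evenly spaced points covering the interval, endpoints included."""
    lo, hi = interval
    return [lo + (hi - lo) * i / (n - 1) for i in range(n)]


def robustness(reward, q, estimate, critical, model=absolute_error,
               alpha_max=100.0, tol=1e-6):
    """Approximate max{alpha : reward(q, u) >= critical for all u in U(alpha)}."""
    def always_ok(alpha):
        return min(reward(q, u) for u in sample(model(alpha, estimate))) >= critical
    if not always_ok(0.0):
        return 0.0                  # requirement fails even at the estimate
    if always_ok(alpha_max):
        return alpha_max            # never violated within the search range
    lo, hi = 0.0, alpha_max
    while hi - lo > tol:            # bisection: always_ok holds at lo, fails at hi
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if always_ok(mid) else (lo, mid)
    return lo


def opportuneness(reward, q, estimate, windfall, model=absolute_error,
                  alpha_max=100.0, tol=1e-6):
    """Approximate min{alpha : reward(q, u) >= windfall for some u in U(alpha)}."""
    def possible(alpha):
        return max(reward(q, u) for u in sample(model(alpha, estimate))) >= windfall
    if possible(0.0):
        return 0.0                  # windfall already reached at the estimate
    if not possible(alpha_max):
        return float("inf")         # not reachable within the search range
    lo, hi = 0.0, alpha_max
    while hi - lo > tol:            # bisection: possible fails at lo, holds at hi
        mid = 0.5 * (lo + hi)
        lo, hi = (lo, mid) if possible(mid) else (mid, hi)
    return hi


def reward(q, u):
    return q * u                    # hypothetical performance function


print(robustness(reward, 2.0, 10.0, critical=15.0))
print(opportuneness(reward, 2.0, 10.0, windfall=30.0))
```

For this linear reward the exact values are 2.5 and 5, since 2(10 − α) ≥ 15 requires α ≤ 2.5 and 2(10 + α) ≥ 30 requires α ≥ 5; the numerical results should agree to within the tolerance.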

We can "read" eq. (1) as follows. The robustness $\hat{\alpha}(q)$ of decision vector $q$ is the greatest value of the horizon of uncertainty $\alpha$ for which specified minimal requirements are always satisfied. $\hat{\alpha}(q)$ expresses robustness (the degree of resistance to uncertainty and immunity against failure), so a large value of $\hat{\alpha}(q)$ is desirable. Robustness is defined as a worst-case scenario up to the horizon of uncertainty: how large can the horizon of uncertainty be and still, even in the worst case, achieve the critical level of outcome?

Eq. (2) states that the opportuneness $\hat{\beta}(q)$ is the least level of uncertainty $\alpha$ which must be tolerated in order to enable the possibility of sweeping success as a result of decisions $q$. $\hat{\beta}(q)$ is the immunity against windfall reward, so a small value of $\hat{\beta}(q)$ is desirable. A small value of $\hat{\beta}(q)$ reflects the opportune situation that great reward is possible even in the presence of little ambient uncertainty. Opportuneness is defined as a best-case scenario up to the horizon of uncertainty: how small can the horizon of uncertainty be and still, in the best case, achieve the windfall reward?

The immunity functions $\hat{\alpha}(q)$ and $\hat{\beta}(q)$ are complementary and are defined in an anti-symmetric sense. Thus "bigger is better" for $\hat{\alpha}(q)$ while "big is bad" for $\hat{\beta}(q)$. The immunity functions (robustness and opportuneness) are the basic decision functions in info-gap decision theory.

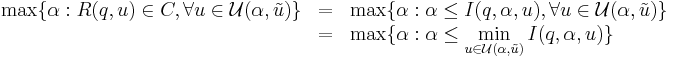

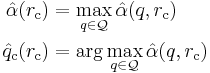

Optimization

The robustness function involves a maximization, but not of the performance or outcome of the decision: in general the outcome could be arbitrarily bad. Rather, it maximizes the level of uncertainty that would be required for the outcome to fail.

The greatest tolerable uncertainty is found at which decision $q$ satisfices the performance at a critical survival-level. One may establish one's preferences among the available actions $q, q', \ldots$ according to their robustnesses $\hat{\alpha}(q), \hat{\alpha}(q'), \ldots$, whereby larger robustness engenders higher preference. In this way the robustness function underlies a satisficing decision algorithm which maximizes the immunity to pernicious uncertainty.

The opportuneness function in eq. (2) involves a minimization, however not, as might be expected, of the damage which can accrue from unknown adverse events. The least horizon of uncertainty is sought at which decision $q$ enables (but does not necessarily guarantee) large windfall gain. Unlike the robustness function, the opportuneness function does not satisfice, it "windfalls". Windfalling preferences are those which prefer actions for which the opportuneness function takes a small value. When $\hat{\beta}(q)$ is used to choose an action $q$, one is "windfalling" by optimizing the opportuneness from propitious uncertainty in an attempt to enable highly ambitious goals or rewards.

Given a scalar reward function $R(q, u)$, depending on the decision vector $q$ and the info-gap-uncertain function $u$, the minimal requirement in eq. (1) is that the reward $R(q, u)$ be no less than a critical value $r_c$. Likewise, the sweeping success in eq. (2) is attainment of a "wildest dream" level of reward $r_w$ which is much greater than $r_c$. Usually neither of these threshold values, $r_c$ and $r_w$, is chosen irrevocably before performing the decision analysis. Rather, these parameters enable the decision maker to explore a range of options. In any case the windfall reward $r_w$ is greater, usually much greater, than the critical reward $r_c$:

$$r_w > r_c$$

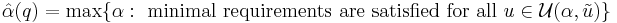

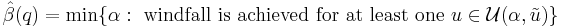

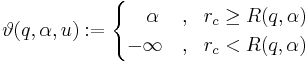

The robustness and opportuneness functions of eqs. (1) and (2) can now be expressed more explicitly:

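$$\hat{\alpha}(q, r_c) = \max \left\{ \alpha : \; r_c \le \min_{u \in \mathcal{U}(\alpha, \tilde{u})} R(q, u) \right\} \qquad (3)$$

$$\hat{\beta}(q, r_w) = \min \left\{ \alpha : \; r_w \le \max_{u \in \mathcal{U}(\alpha, \tilde{u})} R(q, u) \right\} \qquad (4)$$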

$\hat{\alpha}(q, r_c)$ is the greatest level of uncertainty consistent with guaranteed reward no less than the critical reward $r_c$, while $\hat{\beta}(q, r_w)$ is the least level of uncertainty which must be accepted in order to facilitate (but not guarantee) windfall as great as $r_w$. The complementary or anti-symmetric structure of the immunity functions is evident from eqs. (3) and (4).
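The two immunity functions described above can be approximated numerically. The following is a minimal sketch, not a definitive implementation: it assumes a scalar uncertain parameter, an interval uncertainty model $\{u : |u - \tilde{u}| \le \alpha\}$, and a made-up reward function `reward`; the names `u_grid`, `robustness` and `opportuneness` are illustrative and do not come from the info-gap literature. Robustness is found by increasing the horizon until the worst-case reward drops below the critical reward; opportuneness is the first horizon at which the best-case reward reaches the windfall reward.

```python
def reward(q, u):
    """Hypothetical reward: linear in the uncertain u, quadratic cost in q."""
    return q * u - 0.5 * q ** 2

def u_grid(u_est, alpha, n_u=201):
    """Sample the assumed interval model {u : |u - u_est| <= alpha}."""
    return [u_est - alpha + 2.0 * alpha * i / (n_u - 1) for i in range(n_u)]

def robustness(q, r_c, u_est, alphas):
    """Largest alpha on the grid whose worst-case reward is still >= r_c."""
    best = 0.0  # convention: zero robustness if even the estimate fails
    for alpha in alphas:  # alphas must be sorted in increasing order
        if min(reward(q, u) for u in u_grid(u_est, alpha)) < r_c:
            break  # the sets are nested, so a larger alpha cannot recover
        best = alpha
    return best

def opportuneness(q, r_w, u_est, alphas):
    """Smallest alpha on the grid whose best-case reward reaches r_w."""
    for alpha in alphas:
        if max(reward(q, u) for u in u_grid(u_est, alpha)) >= r_w:
            return alpha
    return float("inf")  # windfall not reachable within the grid

alphas = [0.01 * k for k in range(301)]  # horizons 0.00, 0.01, ..., 3.00
alpha_hat = robustness(q=1.0, r_c=0.5, u_est=2.0, alphas=alphas)
beta_hat = opportuneness(q=1.0, r_w=2.5, u_est=2.0, alphas=alphas)
print(alpha_hat, beta_hat)
```

For this reward the worst case over the interval is $1.5 - \alpha$ and the best case is $1.5 + \alpha$, so both values should come out close to 1, up to the grid resolution.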

These definitions can be modified to handle multi-criterion reward functions. Likewise, analogous definitions apply when $R(q, u)$ is a loss rather than a reward.

Decision rules

Based on these functions, one can then decide on a course of action by optimizing for uncertainty: choose the decision which is most robust (can withstand the greatest uncertainty; "satisficing"), or choose the decision which requires the least uncertainty to achieve a windfall.

Formally, optimizing for robustness or optimizing for opportuneness yields a preference relation on the set of decisions, and the decision rule is to optimize with respect to this preference.

In what follows, let $\mathcal{Q}$ be the set of all available or feasible decision vectors $q$.

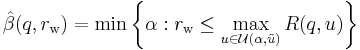

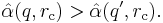

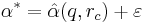

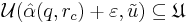

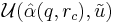

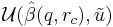

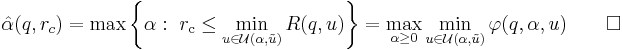

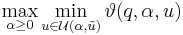

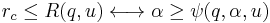

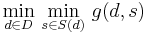

Robust-satisficing

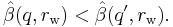

The robustness function generates robust-satisficing preferences on the options: decisions are ranked in increasing order of robustness, for a given critical reward, i.e., by $\hat{\alpha}(q, r_c)$ value, meaning $q \succ_r q'$ if $\hat{\alpha}(q, r_c) > \hat{\alpha}(q', r_c)$.

A robust-satisficing decision is one which maximizes the robustness and satisfices the performance at the critical level $r_c$.

Denote the maximum robustness by $\hat{\alpha}$ (formally $\hat{\alpha}(r_c)$ for the maximum robustness for a given critical reward), and the corresponding decision (or decisions) by $\hat{q}_c$ (formally, $\hat{q}_c(r_c)$, the critical optimizing action for a given level of critical reward):

$$\hat{\alpha}(r_c) = \max_{q \in \mathcal{Q}} \hat{\alpha}(q, r_c), \qquad \hat{q}_c(r_c) = \arg\max_{q \in \mathcal{Q}} \hat{\alpha}(q, r_c)$$

Usually, though not invariably, the robust-satisficing action $\hat{q}_c(r_c)$ depends on the critical reward $r_c$.

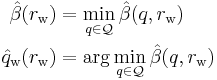

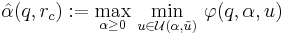

Opportune-windfalling

Conversely, one may optimize opportuneness: the opportuneness function generates opportune-windfalling preferences on the options. Decisions are ranked in decreasing order of opportuneness, for a given windfall reward, i.e., by $\hat{\beta}(q, r_w)$ value, meaning $q \succ_w q'$ if $\hat{\beta}(q, r_w) < \hat{\beta}(q', r_w)$.

The opportune-windfalling decision, $\hat{q}_w(r_w)$, minimizes the opportuneness function on the set of available decisions.

Denote the minimum opportuneness by $\hat{\beta}$ (formally $\hat{\beta}(r_w)$ for the minimum opportuneness for a given windfall reward), and the corresponding decision (or decisions) by $\hat{q}_w$ (formally, $\hat{q}_w(r_w)$, the windfall optimizing action for a given level of windfall reward):

$$\hat{\beta}(r_w) = \min_{q \in \mathcal{Q}} \hat{\beta}(q, r_w), \qquad \hat{q}_w(r_w) = \arg\min_{q \in \mathcal{Q}} \hat{\beta}(q, r_w)$$

The two preference rankings, as well as the corresponding optimal decisions $\hat{q}_c(r_c)$ and $\hat{q}_w(r_w)$, may be different, and may vary depending on the values of $r_c$ and $r_w$.
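The two decision rules can be illustrated on a toy decision set. This is only a sketch under stated assumptions: the options `safe` and `bold`, their numbers, and the linear reward $R(q, u) = \text{nominal}_q + s_q (u - \tilde{u})$ with an interval uncertainty model are all hypothetical, chosen so that robustness and opportuneness have simple closed forms.

```python
# Hypothetical decision set: (nominal reward at the estimate, sensitivity to error).
OPTIONS = {"safe": (10.0, 1.0), "bold": (14.0, 4.0)}

def robustness(q, r_c):
    """Worst case at horizon alpha is nominal - sens * alpha; solve for alpha."""
    nominal, sens = OPTIONS[q]
    return max(0.0, (nominal - r_c) / sens)

def opportuneness(q, r_w):
    """Best case at horizon alpha is nominal + sens * alpha; solve for alpha."""
    nominal, sens = OPTIONS[q]
    return max(0.0, (r_w - nominal) / sens)

def robust_satisficing(r_c):
    """Decision with the largest robustness for critical reward r_c."""
    return max(OPTIONS, key=lambda q: robustness(q, r_c))

def opportune_windfalling(r_w):
    """Decision with the smallest opportuneness value for windfall reward r_w."""
    return min(OPTIONS, key=lambda q: opportuneness(q, r_w))

q_c = robust_satisficing(r_c=8.0)
q_w = opportune_windfalling(r_w=20.0)
print(q_c, q_w)
```

With these made-up numbers the robust-satisficing choice and the opportune-windfalling choice differ, which is the situation the paragraph above describes.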

Applications

Info-gap theory has generated an extensive literature. It has been studied or applied in a range of fields, including engineering,[5][6][7][8][9][10][11][12][13][14][15][16][17][18] biological conservation,[19][20][21][22][23][24][25][26][27][28][29][30] theoretical biology,[31] homeland security,[32] economics,[33][34][35] project management,[36][37][38] and statistics.[39] Foundational issues related to info-gap theory have also been studied.[40][41][42][43][44][45]

The remainder of this section describes in a little more detail the kind of uncertainties addressed by info-gap theory. Although many published works are mentioned below, no attempt is made here to present insights from these papers. The emphasis is not upon elucidation of the concepts of info-gap theory, but upon the context where it is used and the goals.

Engineering

A typical engineering application is the vibration analysis of a cracked beam, where the location, size, shape and orientation of the crack are unknown and greatly influence the vibration dynamics.[9] Very little is usually known about these spatial and geometrical uncertainties. The info-gap analysis allows one to model these uncertainties, and to determine the degree of robustness to them of properties such as vibration amplitude, natural frequencies, and natural modes of vibration. Another example is the structural design of a building subject to uncertain loads such as from wind or earthquakes.[8][10] The response of the structure depends strongly on the spatial and temporal distribution of the loads. However, storms and earthquakes are highly idiosyncratic events, and the interaction between the event and the structure involves very site-specific mechanical properties which are rarely known. The info-gap analysis enables the design of the structure to enhance structural immunity against uncertain deviations from design-base or estimated worst-case loads. Another engineering application involves the design of a neural net for detecting faults in a mechanical system, based on real-time measurements. A major difficulty is that faults are highly idiosyncratic, so that training data for the neural net will tend to differ substantially from data obtained from real-time faults after the net has been trained. The info-gap robustness strategy enables one to design the neural net to be robust to the disparity between training data and future real events.[11][13]

Biology

Biological systems are vastly more complex and subtle than our best models, so the conservation biologist faces substantial info-gaps in using biological models. For instance, Levy et al. [19] use an info-gap robust-satisficing "methodology for identifying management alternatives that are robust to environmental uncertainty, but nonetheless meet specified socio-economic and environmental goals." They use info-gap robustness curves to select among management options for spruce-budworm populations in Eastern Canada. Burgman [46] uses the fact that the robustness curves of different alternatives can intersect, to illustrate a change in preference between conservation strategies for the orange-bellied parrot.

Project management

Project management is another area where info-gap uncertainty is common. The project manager often has very limited information about the duration and cost of some of the tasks in the project, and info-gap robustness can assist in project planning and integration.[37] Financial economics is another area where the future is fraught with surprises, which may be either pernicious or propitious. Info-gap robustness and opportuneness analyses can assist in portfolio design, credit rationing, and other applications.[33]

Limitations

In applying info-gap theory, one must remain aware of certain limitations.

Firstly, info-gap makes assumptions, namely about the universe in question and the degree of uncertainty: the info-gap model is a model of degrees of uncertainty or similarity of various assumptions, within a given universe. Info-gap does not make probability assumptions within this universe (it is non-probabilistic), but it does quantify a notion of "distance from the estimate". In brief, info-gap makes fewer assumptions than a probabilistic method, but does make some assumptions.

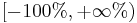

Further, unforeseen events (those not in the universe $\mathfrak{U}$) are not incorporated: info-gap addresses modeled uncertainty, not unexpected uncertainty, as in black swan theory, particularly the ludic fallacy. This is not a problem when the possible events by definition fall in a given universe, but in real world applications, significant events may be "outside model". For instance, a simple model of daily stock market returns, which by definition fall in the range $[-100\%, \infty)$, may include extreme moves such as Black Monday (1987) but might not model the market breakdowns following the September 11 attacks: it considers the "known unknowns", not the "unknown unknowns". This is a general criticism of much decision theory, and is by no means specific to info-gap, but nor is info-gap immune to it.

Secondly, there is no natural scale: is uncertainty of $\alpha = 1$ small or large? Different models of uncertainty give different scales, and require judgment and understanding of the domain and the model of uncertainty. Similarly, measuring differences between outcomes requires judgment and understanding of the domain.

Thirdly, if the universe under consideration is larger than a significant horizon of uncertainty, and outcomes for these distant points are significantly different from those for points near the estimate, then conclusions of robustness or opportuneness analyses will generally be: "one must be very confident of one's assumptions, else outcomes may be expected to vary significantly from projections", which is a cautionary conclusion.

Disclaimer and Summary

The robustness and opportuneness functions can inform decisions. For example, a change in decision that increases robustness may increase or decrease opportuneness. From a subjective stance, robustness and opportuneness both trade off against aspiration for outcome: robustness and opportuneness deteriorate as the decision maker's aspirations increase. Robustness is zero for model-best anticipated outcomes. Robustness curves for alternative decisions may cross as a function of aspiration, implying reversal of preference.
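The crossing of robustness curves can be seen in a small numerical sketch. Everything here is assumed for illustration: two hypothetical options with a linear reward and an interval uncertainty model, for which robustness is (nominal reward minus critical reward) divided by sensitivity, floored at zero.

```python
# Hypothetical options: (nominal reward at the estimate, sensitivity to error).
OPTIONS = {"safe": (10.0, 1.0), "bold": (14.0, 4.0)}

def robustness(q, r_c):
    """Closed-form robustness for the assumed linear reward and interval model."""
    nominal, sens = OPTIONS[q]
    return max(0.0, (nominal - r_c) / sens)

def preferred(r_c):
    """Robust-satisficing choice at aspiration level r_c."""
    return max(OPTIONS, key=lambda q: robustness(q, r_c))

# Sweep the aspiration level and tabulate both robustness curves.
for r_c in (6.0, 8.0, 9.0, 10.0, 12.0):
    row = {q: round(robustness(q, r_c), 2) for q in OPTIONS}
    print(r_c, row, preferred(r_c))
```

In this toy setting each curve falls as the aspiration $r_c$ rises, the robustness of an option reaches zero at its own nominal reward, and the two curves cross between $r_c = 8$ and $r_c = 9$, where the preferred option switches.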

Various theorems identify conditions where larger info-gap robustness implies larger probability of success, regardless of the underlying probability distribution. However, these conditions are technical, and do not translate into any common-sense, verbal recommendations, limiting such applications of info-gap theory by non-experts.

Criticism

A general criticism of non-probabilistic decision rules, discussed in detail at decision theory: alternatives to probability theory, is that optimal decision rules (formally, admissible decision rules) can always be derived by probabilistic methods, with a suitable utility function and prior distribution (this is the statement of the complete class theorems), and thus that non-probabilistic methods such as info-gap are unnecessary and do not yield new or better decision rules.

A more general criticism of decision making under uncertainty is the impact of outsized, unexpected events, ones that are not captured by the model. This is discussed particularly in black swan theory, and info-gap, used in isolation, is vulnerable to this, as is, a fortiori, any decision theory that uses a fixed universe of possibilities, notably probabilistic ones.

In criticism specific to info-gap, Sniedovich[47] raises two objections to info-gap decision theory, one substantive, one scholarly:

- 1. the info-gap uncertainty model is flawed and oversold

- Info-gap models uncertainty via a nested family of subsets around a point estimate, and is touted as applicable under situations of "severe uncertainty". Sniedovich argues that under severe uncertainty, one should not start from a point estimate, which is assumed to be seriously flawed: instead the set one should consider is the universe of possibilities, not subsets thereof. Stated alternatively, under severe uncertainty, one should use global decision theory (consider the entire region of uncertainty), not local decision theory (starting with a point estimate and considering deviations from it).

- 2. info-gap is maximin

- Ben-Haim (2006, p.xii) claims that info-gap is "radically different from all current theories of decision under uncertainty," while Sniedovich argues that info-gap's robustness analysis is precisely maximin analysis of the horizon of uncertainty. By contrast, Ben-Haim states (Ben-Haim 1999, pp. 271–2) that "robust reliability is emphatically not a [min-max] worst-case analysis". Note that Ben-Haim compares info-gap to minimax, while Sniedovich considers it a case of maximin.

Sniedovich has challenged the validity of info-gap theory for making decisions under severe uncertainty. He questions the effectiveness of info-gap theory in situations where the best estimate $\tilde{u}$ is a poor indication of the true value of $u$. Sniedovich notes that the info-gap robustness function is "local" to the region around $\tilde{u}$, where $\tilde{u}$ is likely to be substantially in error. He concludes that therefore the info-gap robustness function is an unreliable assessment of immunity to error.

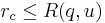

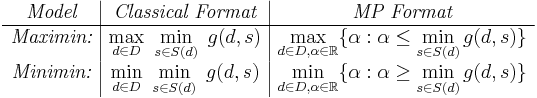

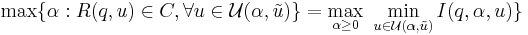

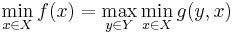

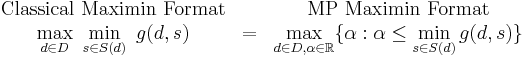

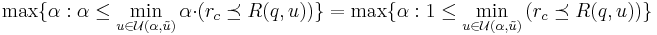

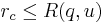

Maximin

Sniedovich argues that info-gap's robustness model is maximin analysis of, not the outcome, but the horizon of uncertainty: it chooses a decision such that one maximizes the horizon of uncertainty $\alpha$ such that the minimal (critical) outcome is achieved, assuming worst-case outcome for a particular horizon. Symbolically, max $\alpha$ assuming min (worst-case) outcome, or maximin.

In other words, while it is not a maximin analysis of outcome over the universe of uncertainty, it is a maximin analysis over a properly construed decision space.
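One way to make this reading concrete is sketched below. The notation is an assumption for illustration: $\mathcal{U}(\alpha, \tilde{u})$ stands for the set of parameter values within horizon $\alpha$ of the estimate $\tilde{u}$, $R(q, u)$ for the reward, $r_c$ for the critical reward, and the indicator-style objective $\varphi$ is introduced here only to express the robustness of a decision $q$ as a max-min problem; it is not quoted from the cited sources.

```latex
\hat{\alpha}(q, r_c)
  \;=\; \max_{\alpha \ge 0} \; \min_{u \in \mathcal{U}(\alpha, \tilde{u})} \varphi(q, \alpha, u),
\qquad
\varphi(q, \alpha, u) \;=\;
\begin{cases}
  \alpha, & r_c \le R(q, u), \\
  0,      & \text{otherwise.}
\end{cases}
```

For a fixed horizon $\alpha$, the inner minimum equals $\alpha$ only if every $u$ in $\mathcal{U}(\alpha, \tilde{u})$ meets the critical reward, and is $0$ otherwise. The outer maximum therefore returns the largest horizon at which the requirement is guaranteed, with the convention that robustness is zero when no horizon qualifies.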

Ben-Haim argues that info-gap's robustness model is not min-max/maximin analysis because it is not worst-case analysis of outcomes; it is a satisficing model, not an optimization model. A straightforward maximin analysis would consider worst-case outcomes over the entire space which, since uncertainty is often potentially unbounded, would yield an unboundedly bad worst case.

Stability radius

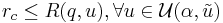

Sniedovich[3] has shown that info-gap's robustness model is a simple stability radius model, namely a local stability model of the generic form

$$\hat{\rho}(\tilde{p}) := \max \left\{ \rho \ge 0 : \; p \in P(s), \ \forall p \in B(\rho, \tilde{p}) \right\}$$

where $B(\rho, \tilde{p})$ denotes a ball of radius $\rho$ centered at $\tilde{p}$ and $P(s)$ denotes the set of values of $p$ that satisfy pre-determined stability conditions.

In other words, info-gap's robustness model is a stability radius model characterized by a stability requirement of the form $r_c \le R(q, u)$. Since stability radius models are designed for the analysis of small perturbations in a given nominal value of a parameter, Sniedovich[3] argues that info-gap's robustness model is unsuitable for the treatment of severe uncertainty characterized by a poor estimate and a vast uncertainty space.

Discussion

Satisficing and bounded rationality

It is correct that the info-gap robustness function is local, and has restricted quantitative value in some cases. However, a major purpose of decision analysis is to provide focus for subjective judgments. That is, regardless of the formal analysis, a framework for discussion is provided. Without entering into any particular framework, or characteristics of frameworks in general, discussion follows about proposals for such frameworks.

Simon[48] introduced the idea of bounded rationality. Limitations on knowledge, understanding, and computational capability constrain the ability of decision makers to identify optimal choices. Simon advocated satisficing rather than optimizing: seeking adequate (rather than optimal) outcomes given available resources. Schwartz,[49] Conlisk[50] and others discuss extensive evidence for the phenomenon of bounded rationality among human decision makers, as well as for the advantages of satisficing when knowledge and understanding are deficient. The info-gap robustness function provides a means of implementing a satisficing strategy under bounded rationality. For instance, in discussing bounded rationality and satisficing in conservation and environmental management, Burgman notes that "Info-gap theory ... can function sensibly when there are 'severe' knowledge gaps." The info-gap robustness and opportuneness functions provide "a formal framework to explore the kinds of speculations that occur intuitively when examining decision options."[51] Burgman then proceeds to develop an info-gap robust-satisficing strategy for protecting the endangered orange-bellied parrot. Similarly, Vinot, Cogan and Cipolla[52] discuss engineering design and note that "the downside of a model-based analysis lies in the knowledge that the model behavior is only an approximation to the real system behavior. Hence the question of the honest designer: how sensitive is my measure of design success to uncertainties in my system representation? ... It is evident that if model-based analysis is to be used with any level of confidence then ... [one must] attempt to satisfy an acceptable sub-optimal level of performance while remaining maximally robust to the system uncertainties."[52] They proceed to develop an info-gap robust-satisficing design procedure for an aerospace application.

Alternatives

Of course, decision in the face of uncertainty is nothing new, and attempts to deal with it have a long history. A number of authors have noted and discussed similarities and differences between info-gap robustness and minimax or worst-case methods.[7][16][35][37][53][54] Sniedovich[47] has demonstrated formally that the info-gap robustness function can be represented as a maximin optimization, and is thus related to Wald's minimax theory. Sniedovich[47] has claimed that info-gap's robustness analysis is conducted in the neighborhood of an estimate that is likely to be substantially wrong, concluding that the resulting robustness function is equally likely to be substantially wrong.

On the other hand, the estimate is the best one has, so it is useful to know if it can err greatly and still yield an acceptable outcome. This critical question clearly raises the issue of whether robustness (as defined by info-gap theory) is qualified to judge whether confidence is warranted,[5][55] [56] and how it compares to methods used to inform decisions under uncertainty using considerations not limited to the neighborhood of a bad initial guess. Answers to these questions vary with the particular problem at hand. Some general comments follow.

Sensitivity analysis

Sensitivity analysis (how sensitive conclusions are to input assumptions) can be performed independently of a model of uncertainty: most simply, one may take two different assumed values for an input and compare the conclusions. From this perspective, info-gap can be seen as a technique of sensitivity analysis, though by no means the only one.

Robust optimization

The robust optimization literature [57][58][59][60][61][62] provides methods and techniques that take a global approach to robustness analysis. These methods directly address decision under severe uncertainty, and have been used for this purpose for more than thirty years now. Wald's Maximin model is the main instrument used by these methods.

The principal difference between the Maximin model employed by info-gap and the various Maximin models employed by robust optimization methods is in the manner in which the total region of uncertainty is incorporated in the robustness model. Info-gap takes a local approach that concentrates on the immediate neighborhood of the estimate. In sharp contrast, robust optimization methods set out to incorporate in the analysis the entire region of uncertainty, or at least an adequate representation thereof. In fact, some of these methods do not even use an estimate.

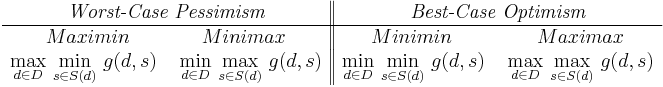

Comparative analysis

Classical decision theory[63][64] offers two approaches to decision-making under severe uncertainty, namely maximin and Laplace's principle of insufficient reason (assume all outcomes equally likely); these may be considered alternative solutions to the problem info-gap addresses.
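The two classical rules named above can be compared on a small payoff table. The table below is invented for illustration, and the function names `maximin` and `laplace` are simply labels for this sketch: maximin picks the decision whose worst payoff is largest, while the principle of insufficient reason weights all states equally and picks the largest average payoff.

```python
# Hypothetical payoff table: rows are decisions, columns are states of nature.
PAYOFFS = {
    "A": [4.0, 4.0, 4.0],
    "B": [1.0, 6.0, 8.0],
    "C": [3.0, 5.0, 5.0],
}

def maximin(payoffs):
    """Wald's rule: choose the decision whose worst-case payoff is largest."""
    return max(payoffs, key=lambda d: min(payoffs[d]))

def laplace(payoffs):
    """Insufficient reason: treat states as equally likely, maximize the mean."""
    return max(payoffs, key=lambda d: sum(payoffs[d]) / len(payoffs[d]))

choice_maximin = maximin(PAYOFFS)
choice_laplace = laplace(PAYOFFS)
print(choice_maximin, choice_laplace)
```

On this table the two rules disagree: the worst-case rule selects the flat option, while the equal-likelihood rule selects the option with the highest average despite its poor worst case.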

Further, as discussed at decision theory: alternatives to probability theory, probabilists, particularly Bayesian probabilists, argue that optimal decision rules (formally, admissible decision rules) can always be derived by probabilistic methods (this is the statement of the complete class theorems), and thus that non-probabilistic methods such as info-gap are unnecessary and do not yield new or better decision rules.

Maximin

As attested by the rich literature on robust optimization, maximin provides a wide range of methods for decision making in the face of severe uncertainty.

Indeed, as discussed in criticism of info-gap decision theory, info-gap's robustness model can be interpreted as an instance of the general maximin model.

Bayesian analysis

As for Laplace's principle of insufficient reason, in this context it is convenient to view it as an instance of Bayesian analysis.

The essence of the Bayesian analysis is applying probabilities for different possible realizations of the uncertain parameters. In the case of Knightian (non-probabilistic) uncertainty, these probabilities represent the decision maker's "degree of belief" in a specific realization.

In our example, suppose there are only five possible realizations of the uncertain revenue to allocation function. The decision maker believes that the estimated function is the most likely, and that the likelihood decreases as the difference from the estimate increases. Figure 11 exemplifies such a probability distribution.

Now, for any allocation, one can construct a probability distribution of the revenue, based on the decision maker's prior beliefs. The decision maker can then choose the allocation with the highest expected revenue, with the lowest probability of an unacceptable revenue, etc.
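The selection step described above can be sketched as follows. All numbers are hypothetical: five realizations with subjective probabilities peaked at the middle (estimated) one, two made-up allocations `x1` and `x2` with their revenue under each realization, and an assumed threshold below which revenue counts as unacceptable.

```python
# Subjective probabilities of the five realizations (the estimate is the middle one).
PROBS = [0.1, 0.2, 0.4, 0.2, 0.1]

# Hypothetical revenue of each allocation under each realization.
REVENUE = {
    "x1": [1.0, 4.0, 7.0, 9.0, 11.0],
    "x2": [5.0, 5.5, 6.0, 6.5, 7.0],
}
UNACCEPTABLE_BELOW = 4.5  # assumed threshold for an unacceptable revenue

def expected_revenue(x):
    """Probability-weighted mean revenue of allocation x."""
    return sum(p * r for p, r in zip(PROBS, REVENUE[x]))

def failure_probability(x):
    """Total probability of realizations giving unacceptable revenue."""
    return sum(p for p, r in zip(PROBS, REVENUE[x]) if r < UNACCEPTABLE_BELOW)

best_by_mean = max(REVENUE, key=expected_revenue)
best_by_risk = min(REVENUE, key=failure_probability)
print(best_by_mean, best_by_risk)
```

In this example the two criteria select different allocations, so the choice of criterion matters as much as the choice of probabilities.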

The most problematic step of this analysis is the choice of the realization probabilities. When there is extensive and relevant past experience, an expert may use this experience to construct a probability distribution. But even with extensive past experience, when some parameters change, the expert may only be able to estimate that $A$ is more likely than $B$, but will not be able to reliably quantify this difference. Furthermore, when conditions change drastically, or when there is no past experience at all, it may prove difficult even to estimate whether $A$ is more likely than $B$.

Nevertheless, methodologically speaking, this difficulty is not as problematic as basing the analysis of a problem subject to severe uncertainty on a single point estimate and its immediate neighborhood, as done by info-gap. And what is more, contrary to info-gap, this approach is global, rather than local.

Still, it must be stressed that Bayesian analysis does not expressly concern itself with the question of robustness.

It should also be noted that Bayesian analysis raises the issue of learning from experience and adjusting probabilities accordingly. In other words, decision is not a one-stop process, but profits from a sequence of decisions and observations.

Classical decision theory perspective

Sniedovich[47] raises two objections to info-gap decision theory, from the point of view of classical decision theory, one substantive, one scholarly:

- the info-gap uncertainty model is flawed and oversold

- Info-gap models uncertainty via a nested family of subsets around a point estimate, and is touted as applicable under situations of "severe uncertainty". Sniedovich argues that under severe uncertainty, one should not start from a point estimate, which is assumed to be seriously flawed: instead the set one should consider is the universe of possibilities, not subsets thereof. Stated alternatively, under severe uncertainty, one should use global decision theory (consider the entire universe), not local decision theory (starting with an estimate and considering deviations from it).

- info-gap is maximin

- Ben-Haim (2006, p.xii) claims that info-gap is "radically different from all current theories of decision under uncertainty," while Sniedovich argues that info-gap's robustness analysis is precisely maximin analysis of the horizon of uncertainty. By contrast, Ben-Haim states (Ben-Haim 1999, pp. 271–2) that "robust reliability is emphatically not a [min-max] worst-case analysis".

Sniedovich has challenged the validity of info-gap theory for making decisions under severe uncertainty. He questions the effectiveness of info-gap theory in situations where the best estimate $\tilde{u}$ is a poor indication of the true value of $u$. Sniedovich notes that the info-gap robustness function is "local" to the region around $\tilde{u}$, where $\tilde{u}$ is likely to be substantially in error. He concludes that therefore the info-gap robustness function is an unreliable assessment of immunity to error.

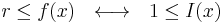

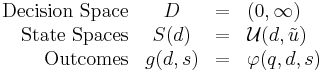

In the framework of classical decision theory, info-gap's robustness model can be construed as an instance of Wald's Maximin model and its opportuneness model is an instance of the classical Minimin model. Both operate in the neighborhood of an estimate of the parameter of interest whose true value is subject to severe uncertainty and therefore is likely to be substantially wrong. Moreover, the considerations brought to bear upon the decision process itself also originate in the locality of this unreliable estimate, and so may or may not be reflective of the entire range of decisions and uncertainties.

Background, working assumptions, and a look ahead

Decision under severe uncertainty is a formidable task, and the development of methodologies capable of handling this task is an even more arduous undertaking. Indeed, over the past sixty years an enormous effort has gone into the development of such methodologies. Yet, for all the knowledge and expertise that have accrued in this area of decision theory, no fully satisfactory general methodology is available to date.

Now, as portrayed in the info-gap literature, Info-Gap was designed expressly as a methodology for solving decision problems that are subject to severe uncertainty. And what is more, its aim is to seek solutions that are robust.

Thus, to have a clear picture of info-gap's modus operandi and its role and place in decision theory and robust optimization, it is imperative to examine it within this context. In other words, it is necessary to establish info-gap's relation to classical decision theory and robust optimization. To this end, the following questions must be addressed:

- What are the characteristics of decision problems that are subject to severe uncertainty?

- What difficulties arise in the modelling and solution of such problems?

- What type of robustness is sought?

- How does info-gap theory address these issues?

- In what way is info-gap decision theory similar to and/or different from other theories for decision under uncertainty?

Two important points need to be elucidated in this regard at the outset:

- Considering the severity of the uncertainty that info-gap was designed to tackle, it is essential to clarify the difficulties posed by severe uncertainty.

- Since info-gap is a non-probabilistic method that seeks to maximize robustness to uncertainty, it is imperative to compare it to the single most important "non-probabilistic" model in classical decision theory, namely Wald's Maximin paradigm (Wald 1945, 1950). After all, this paradigm has dominated the scene in classical decision theory for well over sixty years now.

So, first let us clarify the assumptions that are implied by severe uncertainty.

Working assumptions

Info-gap decision theory employs three simple constructs to capture the uncertainty associated with decision problems:

- A parameter $u$ whose true value is subject to severe uncertainty.

- A region of uncertainty $\mathfrak{U}$ where the true value of $u$ lies.

- An estimate $\tilde{u}$ of the true value of $u$.

It should be pointed out, though, that as such these constructs are generic, meaning that they can be employed to model situations where the uncertainty is not severe but mild, indeed very mild. So it is vital to be clear that to give apt expression to the severity of the uncertainty, in the Info-Gap framework these three constructs are given specific meaning.

Working Assumptions

- The region of uncertainty $\mathfrak{U}$ is relatively large. In fact, Ben-Haim (2006, p. 210) indicates that in the context of info-gap decision theory most of the commonly encountered regions of uncertainty are unbounded.

- The estimate $\tilde{u}$ is a poor approximation of the true value of $u$. That is, the estimate is a poor indication of the true value of $u$ (Ben-Haim, 2006, p. 280) and is likely to be substantially wrong (Ben-Haim, 2006, p. 281).

The picture marks the true (unknown) value of $u$.

The point to note here is that conditions of severe uncertainty entail that the estimate $\tilde{u}$ can, relatively speaking, be very distant from the true value of $u$. This is particularly pertinent for methodologies, like info-gap, that seek robustness to uncertainty. Indeed, assuming otherwise would, methodologically speaking, be tantamount to engaging in wishful thinking.

In short, the situations that info-gap is designed to take on are demanding in the extreme. Hence, the challenge that one faces conceptually, methodologically and technically is considerable. It is essential therefore to examine whether info-gap robustness analysis succeeds in this task, and whether the tools that it deploys in this effort are different from those made available by Wald's (1945) Maximin paradigm especially for robust optimization.

So let us take a quick look at this stalwart of classical decision theory and robust optimization.

Wald's Maximin paradigm

The basic idea behind this famous paradigm can be expressed in plain language as follows:

Maximin Rule: The maximin rule tells us to rank alternatives by their worst possible outcomes: we are to adopt the alternative the worst outcome of which is superior to the worst outcome of the others.

Thus, according to this paradigm, in the framework of decision-making under severe uncertainty, the robustness of an alternative is a measure of how well this alternative can cope with the worst uncertain outcome that it can generate. Needless to say, this attitude towards severe uncertainty often leads to the selection of highly conservative alternatives. This is precisely the reason that this paradigm is not always a satisfactory methodology for decision-making under severe uncertainty (Tintner 1952).
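The rule quoted above can be sketched in a few lines of code. The payoff table, the alternative names and the function name `maximin` are hypothetical and serve only to illustrate the ranking step; larger payoffs are assumed to be better.

```python
def maximin(payoffs):
    """Return (best_alternative, security_levels) under Wald's maximin rule.

    payoffs maps each alternative to the payoffs it yields, one per
    possible state of nature (larger is better).
    """
    # Security level of an alternative: its worst possible outcome.
    security = {alt: min(outcomes) for alt, outcomes in payoffs.items()}
    # Adopt the alternative whose worst outcome is the best.
    best = max(security, key=security.get)
    return best, security


# Hypothetical payoff table: one row per alternative, one column per state.
payoffs = {
    "cautious": [4, 5, 6],
    "moderate": [2, 7, 9],
    "bold": [-5, 8, 20],
}

best, security = maximin(payoffs)
print(best, security)
```

In this made-up table the rule selects the alternative with the narrowest spread of outcomes and ignores the large payoffs that the other alternatives can produce, which is the conservatism referred to above.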

As indicated in the overview, info-gap's robustness model is a Maximin model in disguise. More specifically, it is a simple instance of Wald's Maximin model where:

- The region of uncertainty associated with an alternative decision is an immediate neighborhood of the estimate $\tilde{u}$.

- The uncertain outcomes of an alternative are determined by a characteristic function of the performance requirement under consideration.
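The Maximin reading described above can be written out as a short worked formula. This is a sketch under assumptions rather than a quotation from the cited sources: it assumes a performance requirement of the form $r_c \le R(q,u)$, where $R(q,u)$ is the performance of decision $q$ under parameter value $u$, $r_c$ is the critical performance level, and $\mathcal{U}(\alpha,\tilde{u})$ is the neighborhood of size $\alpha$ around the estimate. The function $\varphi$ is the characteristic function of the requirement, scaled by $\alpha$.

```latex
\hat{\alpha}(q, r_c)
  = \max \bigl\{ \alpha \ge 0 : r_c \le R(q,u) \ \ \forall u \in \mathcal{U}(\alpha, \tilde{u}) \bigr\}
  = \max_{\alpha \ge 0} \ \min_{u \in \mathcal{U}(\alpha, \tilde{u})} \varphi(q, \alpha, u),
\qquad
\varphi(q, \alpha, u) =
  \begin{cases}
    \alpha, & r_c \le R(q,u) \\
    0,      & \text{otherwise}
  \end{cases}
```

For a fixed $\alpha$ the inner minimum equals $\alpha$ when every $u$ in the neighborhood meets the requirement and $0$ otherwise, so the outer maximum picks the largest neighborhood over which the requirement holds throughout.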

Thus, aside from the conservatism issue, a far more serious issue must be addressed. This is the validity issue arising from the local nature of info-gap's robustness analysis.

Local vs global robustness

The validity of the results generated by info-gap's robustness analysis is crucially contingent on the quality of the estimate $\tilde{u}$. Alas, according to info-gap's own working assumptions, this estimate is poor and likely to be substantially wrong (Ben-Haim, 2006, pp. 280–281).

The trouble with this feature of info-gap's robustness model is brought out more forcefully by the picture. The white circle represents the immediate neighborhood of the estimate $\tilde{u}$ on which the Maximin analysis is conducted. Since the region of uncertainty is large and the quality of the estimate is poor, it is very likely that the true value of $u$ is distant from the point at which the Maximin analysis is conducted.

So given the severity of the uncertainty under consideration, how valid/useful can this type of Maximin analysis really be?

The critical issue here is then to what extent a local robustness analysis à la Maximin, conducted in the immediate neighborhood of a poor estimate, can aptly represent a large region of uncertainty. This is a serious issue that must be dealt with in this article.

It should be pointed out that, in comparison, robust optimization methods invariably take a far more global view of robustness. So much so that scenario planning and scenario generation are central issues in this area. This reflects a strong commitment to an adequate representation of the entire region of uncertainty in the definition of robustness and in the robustness analysis itself.

And finally there is another reason why the intimate relation to Maximin is crucial to this discussion. This has to do with the portrayal of info-gap's contribution to the state of the art in decision theory, and its role and place vis-a-vis other methodologies.

Role and place in decision theory

Info-gap is emphatic about its advancement of the state of the art in decision theory:

Info-gap decision theory is radically different from all current theories of decision under uncertainty. The difference originates in the modelling of uncertainty as an information gap rather than as a probability.

Ben-Haim (2006, p. xii)

In this book we concentrate on the fairly new concept of information-gap uncertainty, whose differences from more classical approaches to uncertainty are real and deep. Despite the power of classical decision theories, in many areas such as engineering, economics, management, medicine and public policy, a need has arisen for a different format for decisions based on severely uncertain evidence.

Ben-Haim (2006, p. 11)

These strong claims must be substantiated. In particular, a clear-cut, unequivocal answer must be given to the following question: in what way is info-gap's generic robustness model different, indeed radically different, from worst-case analysis a la Maximin?

Subsequent sections of this article describe various aspects of info-gap decision theory and its applications, how it proposes to cope with the working assumptions outlined above, the local nature of info-gap's robustness analysis and its intimate relationship with Wald's classical Maximin paradigm and worst-case analysis.

Invariance property

The main point to keep in mind here is that info-gap's raison d'être is to provide a methodology for decision under severe uncertainty. This means that its primary test would be in the efficacy of its handling of and coping with severe uncertainty. To this end it must be established first how Info-Gap's robustness/opportuneness models behave/fare, as the severity of the uncertainty is increased/decreased.

Second, it must be established whether info-gap's robustness/opportuneness models give adequate expression to the potential variability of the performance function over the entire region of uncertainty. This is particularly important because info-gap is usually concerned with relatively large, indeed unbounded, regions of uncertainty.

So, let $\mathfrak{U}$ denote the total region of uncertainty and consider these key questions:

- How does the robustness/opportuneness analysis respond to an increase/decrease in the size of $\mathfrak{U}$?

- How does an increase/decrease in the size of $\mathfrak{U}$ affect the robustness or opportuneness of a decision?

- How representative are the results generated by info-gap's robustness/opportuneness analysis of what occurs in the relatively large total region of uncertainty $\mathfrak{U}$?

Suppose then that the robustness $\hat{\alpha}(q, r_c)$ has been computed for a decision $q$ and it is observed that $\mathcal{U}(\alpha^*, \tilde{u}) \subseteq \mathfrak{U}$ where $\alpha^* = \hat{\alpha}(q, r_c) + \varepsilon$ for some $\varepsilon > 0$.

The question is then: how would the robustness of $q$, namely $\hat{\alpha}(q, r_c)$, be affected if the region of uncertainty were, say, twice as large as $\mathfrak{U}$, or perhaps even 10 times as large as $\mathfrak{U}$?

Consider then the following result, which is a direct consequence of the local nature of info-gap's robustness/opportuneness analysis and the nesting property of info-gap's regions of uncertainty (Sniedovich 2007):

Invariance Theorem

The robustness of decision $q$ is invariant with the size of the total region of uncertainty $\mathfrak{U}$ for all $\mathfrak{U}$ such that

(7) $\mathcal{U}(\hat{\alpha}(q, r_c) + \varepsilon, \tilde{u}) \subseteq \mathfrak{U}$ for some $\varepsilon > 0$

In other words, for any given decision, info-gap's analysis yields the same results for all total regions of uncertainty that contain $\mathcal{U}(\hat{\alpha}(q, r_c) + \varepsilon, \tilde{u})$. This applies to both the robustness and opportuneness models.

This is illustrated in the picture: the robustness of a given decision does not change notwithstanding an increase in the region of uncertainty from a given total region to a larger one that contains it.

In short, by dint of focusing exclusively on the immediate neighborhood of the estimate $\tilde{u}$, info-gap's robustness/opportuneness models are inherently local. For this reason they are, in principle, incapable of incorporating in the analysis of $\hat{\alpha}(q, r_c)$ and $\hat{\beta}(q, r_w)$ regions of uncertainty that lie outside the neighborhoods $\mathcal{U}(\hat{\alpha}(q, r_c) + \varepsilon, \tilde{u})$ and $\mathcal{U}(\hat{\beta}(q, r_w) + \varepsilon, \tilde{u})$ of the estimate $\tilde{u}$, respectively.

To illustrate, consider a simple numerical example that fixes the total region of uncertainty $\mathfrak{U}$, the estimate $\tilde{u}$ and, for some decision $q$, the resulting neighborhood $\mathcal{U}(\hat{\alpha}(q, r_c) + \varepsilon, \tilde{u})$. The picture is this:

where the term "No man's land" refers to the part of the total region of uncertainty that is outside the region $\mathcal{U}(\hat{\alpha}(q, r_c) + \varepsilon, \tilde{u})$.

Note that in this case the robustness of decision $q$ is based on its (worst-case) performance over no more than a minuscule part of the total region of uncertainty that is an immediate neighborhood of the estimate $\tilde{u}$. Since usually info-gap's total region of uncertainty is unbounded, this illustration represents a usual case rather than an exception.
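The invariance described above can also be checked numerically on a toy problem. The following is a minimal sketch, not part of the info-gap literature: the performance function `performance`, the interval-shaped neighborhoods, the grid step, and all numerical values are hypothetical choices made for illustration.

```python
def robustness(q, r_c, u_est, region, performance, step=0.01):
    """Grid approximation of info-gap robustness for a scalar parameter.

    Returns the largest alpha = k * step such that performance(q, u) >= r_c
    at every grid point u with |u - u_est| <= alpha inside the bounded
    total region (lo, hi).
    """
    lo, hi = region
    alpha = 0.0
    k = 0
    while True:
        a = k * step
        left, right = max(lo, u_est - a), min(hi, u_est + a)
        # The neighborhoods are nested, so only the two new endpoints
        # need checking at each step.
        if performance(q, left) < r_c or performance(q, right) < r_c:
            break
        alpha = a
        if left == lo and right == hi:
            break  # the neighborhood already covers the whole region
        k += 1
    return alpha


def performance(q, u):
    # Hypothetical reward: degrades as u moves away from zero.
    return q - abs(u)


# Three total regions of very different size, all containing the estimate.
regions = [(-10.0, 10.0), (-100.0, 100.0), (-1e6, 1e6)]
results = [robustness(q=5.0, r_c=3.0, u_est=0.0, region=r,
                      performance=performance) for r in regions]
print(results)
```

The search stops as soon as the requirement fails near the estimate, so the three regions produce the same robustness value even though the largest is many orders of magnitude wider than the smallest. Nothing outside the small neighborhood examined before the first failure is ever evaluated.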

The thing to note then is that info-gap's robustness and opportuneness are by definition local properties. As such they cannot assess the performance of decisions over the total region of uncertainty. For this reason it is not clear how info-gap's robustness/opportuneness models can provide a meaningful, sound and useful basis for decision under severe uncertainty, where the estimate is poor and is likely to be substantially wrong.

This crucial issue is addressed in subsequent sections of this article.

Maximin/Minimin: playing robustness/opportuneness games with Nature

For well over sixty years now Wald's Maximin model has figured in classical decision theory and related areas, such as robust optimization, as the foremost non-probabilistic paradigm for modeling and treatment of severe uncertainty.

Info-gap is propounded (e.g. Ben-Haim 2001, 2006) as a new non-probabilistic theory that is radically different from all current decision theories for decision under uncertainty. So, it is imperative to examine in this discussion in what way, if any, info-gap's robustness model is radically different from Maximin. For one thing, there is a well-established assessment of the utility of Maximin. For example, Berger (Chapter 5)[66] suggests that even in situations where no prior information is available (a best case for Maximin), Maximin can lead to bad decision rules and be hard to implement. He recommends Bayesian methodology. And as indicated above,

It should also be remarked that the minimax principle even if it is applicable leads to an extremely conservative policy.

Tintner (1952, p. 25)[67]